Mounting a Finding Aids Collection

From DLXS Documentation

(→Specific Encoding Issues) |

(→Examples of Findaid Class Implementations and Practices) |

||

| (334 intermediate revisions not shown.) | |||

| Line 1: | Line 1: | ||

[[DLXS Wiki|Main Page]] > [[Mounting Collections: Class-specific Steps]] > Mounting a Finding Aids Collection | [[DLXS Wiki|Main Page]] > [[Mounting Collections: Class-specific Steps]] > Mounting a Finding Aids Collection | ||

| - | |||

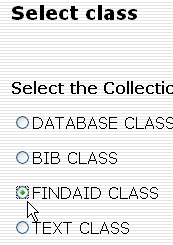

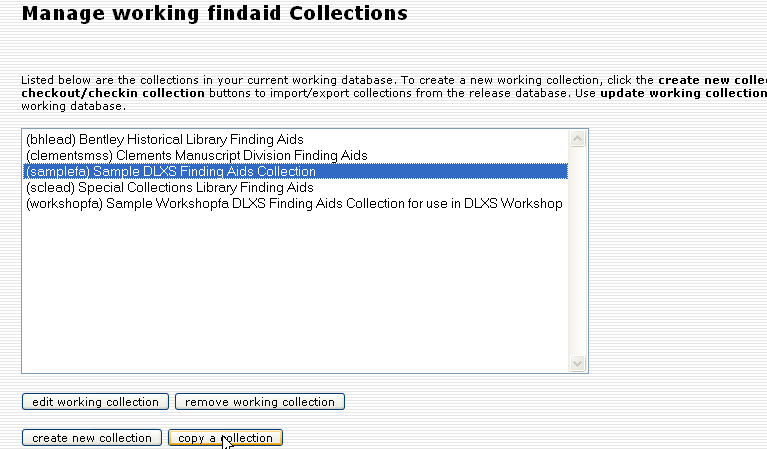

This topic describes how to mount a Findaid Class collection.

==Overview==

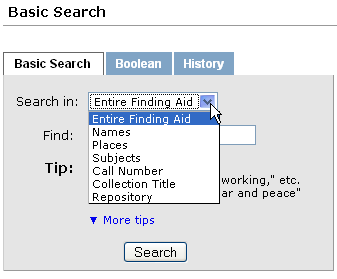

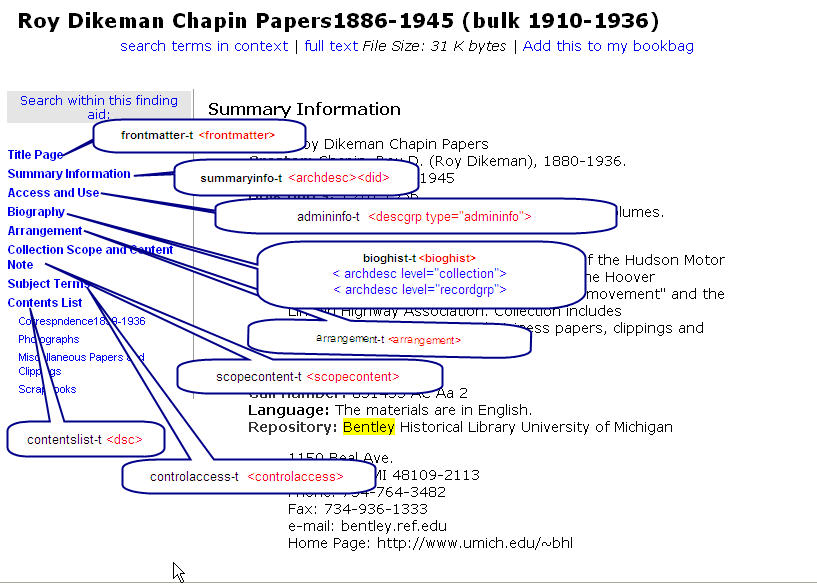

The Finding Aids Class is in many ways similar in behavior to Text Class. Access minimally includes full text searching across collections or within a particular collection of Finding Aids, viewing Finding Aids in a variety of display formats, and creation of personal collections ("bookbag") of Finding Aids.

To mount a Finding Aids Collection, you will need to complete the following steps:
# [[Preparing_Data and Directories|Prepare your data and set up a directory structure]]
# [[Finding_Aids_Data_Preparation#Validating_and_Normalizing_Your_Data| Validate and normalize your data]]
# [[Building the Index |Build the Index]]
# [[Mounting the Collection Online|Mount the collection online]]

===[[Findaid Class Behaviors Overview]]===

This section describes the basic Findaid Class behaviors.

===Examples of Findaid Class Implementations and Practices===

This section contains links to public implementations of DLXS Findaid Class as well as documentation on workflow and implementation issues. If you are a member of DLXS and have a collection or resource you would like to add, or wish to add more information about your collection, please edit this section.

;[http://quod.lib.umich.edu/cgi/f/findaid/findaid-idx?&page=simple&c=bhlead University of Michigan, Bentley Historical Library Finding Aids]
: Search page for Bentley out-of-the-box DLXS 13 implementation.
;[http://bentley.umich.edu/EAD/index.php University of Michigan, Bentley Historical Library Finding Aids Main Entry Page]
: Main entry page for Bentley out-of-the-box DLXS 13 implementation.

;[http://bentley.umich.edu/EAD/eadproject.php Overview of Bentley's workflow process for Finding Aids]
: See also the links in [[#Practical_EAD_Encoding_Issues | Practical EAD Encoding Issues]] for background on the Bentley EAD workflow and encoding practices.
;[http://dlc.lib.utk.edu/f/fa/ University of Tennessee Special Collections Libraries]
: DLXS Findaid Class version ?
;[http://digital.library.pitt.edu/ead/ University of Pittsburgh, Historic Pittsburgh Finding Aids]
: DLXS Findaid Class version ?
;[http://digital.library.pitt.edu/ead/aboutead.html Background on Pittsburgh Finding Aids workflow]
;[http://digicoll.library.wisc.edu/wiarchives University of Wisconsin, Archival Resources in Wisconsin: Descriptive Finding Aids]
: DLXS Findaid Class version ?
;[http://discover.lib.umn.edu/findaid University of Minnesota Libraries, Online Finding Aids]
: DLXS Findaid Class version ?
;[https://wiki.lib.umn.edu/Staff/FindingAidsInEAD/ EAD Implementation at the University of Minnesota]
;[http://archives.getty.edu:8082/cgi/f/findaid/findaid-idx?cc=utf8a;c=utf8a;tpl=browse.tpl Getty Research Institute Special Collections Finding Aids]
: DLXS 13.
;[http://archives.getty.edu:8082/cgi/f/findaid/findaid-idx?cc=iastaff;c=iastaff;tpl=browse.tpl J. Paul Getty Trust Institutional Archives Finding Aids]
: DLXS 13.

==Working with the EAD==

===EAD 2002 DTD Overview===

These instructions assume that you have already encoded your finding aids files in the XML-based [http://www.loc.gov/ead/ EAD 2002 DTD]. If you have finding aids encoded using the older EAD 1.0 standard, or are using the SGML version of EAD 2002, you will need to convert your files to the XML version of EAD 2002. When converting from SGML to XML, a number of character set issues may arise. See [[Data_Conversion_and_Preparation#Unicode.2C_XML.2C_and_Normalization| Data Conversion and Preparation: Unicode, XML, and Normalization]].

Resources for converting from EAD 1.0 to EAD 2002 and/or from SGML EAD to XML EAD are available from:
* The Society of American Archivists EAD Tools page: http://www.archivists.org/saagroups/ead/tools.html
* Library of Congress EAD conversion tools: http://lcweb2.loc.gov/music/eadmusic/eadconv12/ead2002_r.htm

If you use a conversion program such as the one supplied by the Library of Congress, make sure you read its documentation and change the settings to match your local practices before converting a large number of EADs. For example, if you use the LC converter, you will probably want to change the XSL that inserts the string <span class="greentext">"hdl:loc"</span> in the <eadid>, so that the output follows your local practices.
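After a batch conversion, you may want to confirm that no converter default ended up in the <eadid> values. The following is a minimal shell sketch and is not part of DLXS. find_default_eadids is a hypothetical helper name. It assumes that each converted EAD is a *.xml file and that its <eadid> element sits on a single line.

```shell
#!/bin/sh
# Hypothetical helper: list converted EAD files whose <eadid> text still
# contains the Library of Congress default string "hdl:loc".
# Assumes *.xml file names and one <eadid>...</eadid> element per line.
find_default_eadids() {
    dir=$1
    # -l prints only the names of files that contain a match
    grep -l '<eadid[^>]*>[^<]*hdl:loc' "$dir"/*.xml 2>/dev/null
}
```

For example, find_default_eadids $DLXSROOT/prep/w/workshopfa/data would list the files whose <eadid> still needs correcting.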

Other good sources of information about EAD encoding practices and practical issues involved with EADs are:


* EAD 2002 tag library: http://www.loc.gov/ead/tglib/index.html
* The Society of American Archivists EAD Help page: http://www.archivists.org/saagroups/ead/
* Various EAD Best Practice Guidelines listed on the Society of American Archivists EAD essentials page: http://www.archivists.org/saagroups/ead/essentials.html (the links to BPGs are at the bottom of the page)
* The EAD listserv: http://listserv.loc.gov/listarch/ead.html

Sources of information about more general issues, such as user studies, can be found in:

http://www.library.uiuc.edu/archives/features/workpap.php

===Practical EAD Encoding Issues===

The EAD standard was designed as a loose standard in order to accommodate the large variety in local practices for paper finding aids and make it easy for archives to convert from paper to electronic form. As a result, conformance with the EAD standard still allows a great deal of variety in encoding practices.

More information on the Bentley's encoding practices and workflow:

* Overview of Bentley's workflow process for Finding Aids: http://bentley.umich.edu/EAD/eadproject.php
* Description of Bentley Finding Aids and their presentation on the web: http://bentley.umich.edu/EAD/system.php
* Bentley MS Word EAD templates and macros: http://bentley.umich.edu/EAD/bhlfiles.php
* Description of EAD tags used in Bentley EADs: http://bentley.umich.edu/EAD/bhltags.php

====Types of changes to accommodate differing encoding practices and/or interface changes====

* Custom preprocessing
* Modify CSS

====Specific Encoding Issues====

There are a number of encoding issues that may affect the data preparation, indexing, searching, and rendering of your finding aids. Some of them are:

* Preprocessing and Data Prep issues
** <span class="redtext"><eadid> should be less than about 20 characters in length</span>
** [[Attribute ids must be unique within the entire collection ]]
** If you use attribute ids and corresponding targets within your EADs, preparedocs.pl may need to be modified.
** [[Character Encoding issues]]
** UTF-8 Byte Order Marks (BOM) should be removed from EADs prior to concatenation.
** XML processing instructions should be removed from EADs prior to concatenation.
** Multiline DOCTYPE declarations are not properly handled by the data prep scripts in release 13 and earlier (without the August 24, 2007 patch).
** If your DOCTYPE declaration contains entities, you need to modify the appropriate *.dcl files accordingly. See $DLXSROOT/prep/s/samplefa/samplefa.ead2002.entity.example.dcl for an example.
** Out-of-the-box <dao> handling may need to be modified for your needs.
| - | + | * Fabricated region issues (some of these involve XSL as well) | |

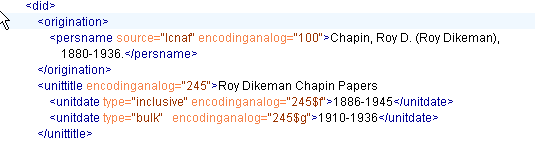

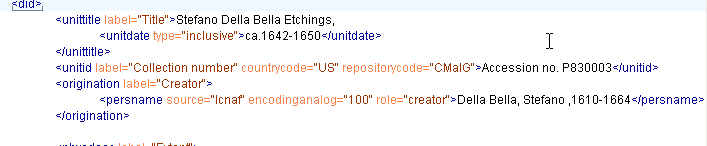

| - | *Fabricated region issues (some of these involve XSL as well) | + | ** If your <unititle> element precedes your <origination> element in <span class="unixcommand">the top level <did>, you will have to modify the maintitle fabricated region query in *.extra.srch </span> See [[Mounting_Finding_Aids:_Release_14/Workshop_working_copy#Title_of_Finding_Aid_does_not_show_up| Troubleshooting:Title of Finding Aid does not show up]] |

| - | ** If your <unititle> element precedes your <origination> element in <span class="unixcommand">the top level <did>, you will have to modify the maintitle fabricated region query in | + | ** If you do not use a <frontmatter> element, you will either have to either a) create and populate frontmatter elements in your EADs manually, or b) run your EADs through some preprocessing XSL to create and populate frontmatter elements, or c) you will have to create a fabricated region to provide an appropriate "Title Page" region based on the <eadheader> and you may also need to change the XSL and/or subclass FindaidClass to change the code that handles the Title Page region. |

| - | ** If you do not use a <frontmatter> element, you will have to create a fabricated region to provide an appropriate "Title Page" region based on the <eadheader> and you may also need to change the XSL and/or subclass FindaidClass to change the code that handles the Title Page region. | + | |

* Table of Contents and Focus Region issues
** If you do not use a <frontmatter> element, you may have to make the changes mentioned above to get the title page to show in the table of contents and to display when the user clicks on the "Title Page" link in the table of contents.
** If your encoding practices for <bioghist> differ from the Bentley's, you may need to make changes in findaidclass.cfg or create a subclass of FindaidClass and override FindaidClass::GetBioghistTocHead, and/or change the appropriate XSL files.
** If you want <relatedmaterial> and/or <separatedmaterial> to show up in the table of contents (TOC) on the left-hand side of the finding aid, you may have to modify findaidclass.cfg and make other modifications to the code. This also applies to any other sections of the finding aid that are not listed in %gSectHeadsHash in the out-of-the-box findaidclass.cfg.
** See also [[Customizing_Findaid_Class#Working_with_the_table_of_contents|Customizing Findaid Class: Working with the table of contents]]
* XSL issues
** If you have encoded <unitdate>s as siblings of <unittitle>s, you may have to modify the appropriate XSL templates.
** If you want the middleware to use the <head> element for labeling sections instead of the default hard-coded values in findaidclass.cfg, you may need to change fabricated regions and/or make changes to the XSL and/or possibly modify findaidclass.cfg or subclass FindaidClass.
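You can catch several of the preprocessing issues above before you run the Makefile. The following is a sketch under stated assumptions and is not the DLXS data prep code:
* All helper names are hypothetical.
* The 20-character limit comes from the "less than about 20 characters" guideline.
* <eadid> and the opening of the DOCTYPE declaration are assumed to sit on single lines.
* strip_bom_and_xmldecl assumes GNU sed, which supports the \xEF\xBB\xBF escape. It removes only a BOM and an XML declaration at the very start of the file.

```shell
#!/bin/sh
# Hypothetical pre-concatenation checks for a single EAD file.

# Print the text of the first <eadid> (assumes it sits on one line).
eadid_of() {
    sed -n 's/.*<eadid[^>]*>\([^<]*\)<\/eadid>.*/\1/p' "$1" | head -n 1
}

# Warn and return 1 if the <eadid> is 20 characters or longer.
check_eadid_length() {
    id=$(eadid_of "$1")
    if [ "${#id}" -ge 20 ]; then
        echo "WARN: $1: eadid '$id' is ${#id} characters"
        return 1
    fi
    return 0
}

# Return 0 if the file starts with a UTF-8 byte order mark (EF BB BF).
has_bom() {
    [ "$(head -c 3 "$1" | od -An -tx1 | tr -d ' \n')" = "efbbbf" ]
}

# Print the file without a leading BOM or a leading <?xml ...?> declaration.
# GNU sed assumed; LC_ALL=C makes the \xHH escapes match raw bytes.
strip_bom_and_xmldecl() {
    LC_ALL=C sed -e '1s/^\xEF\xBB\xBF//' -e '1s/^<?xml[^>]*?>//' "$1"
}

# Return 0 if the line that opens the DOCTYPE does not also close it,
# i.e. the declaration most likely spans more than one line (heuristic).
has_multiline_doctype() {
    grep '<!DOCTYPE' "$1" | grep -qv '>'
}
```

A loop such as for f in data/*.xml; do check_eadid_length "$f"; done gives a quick report. strip_bom_and_xmldecl writes to standard output, so redirect its output to a new file rather than over the original.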

==Preparing Data and Directories==

===Set Up Directories and Files for Data Preparation===

| + | You will need to set up a directory structure where you plan to store your EAD2002 XML source files, your object files (used by xpat for indexing), index files (including region index files)and other information such as data dictionaries, and files you use to prepare your data. | ||

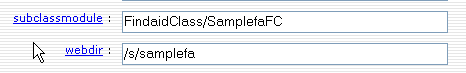

| - | + | The convention used by DLXS is to use subdirectories named with the first letter of the collection id and the collection name:$DLXSROOT/xxx/{c}/{coll}/ where $DLXSROOT is the "tree" where you install all DLXS components, {c} is the first letter of the name of the collection you are indexing, and {coll} is the collection ID of the collection you are indexing. For example, if your collection ID is "bhlead" and your DLXSROOT is "/l1", you will place the Makefile in /l1/bin/b/bhlead/ , e.g., /l1/bin/b/bhlead/Makefile. See the [[Directory Structure |DLPS Directory Conventions]] section and [http://www.dlxs.org/training/workshop200707/overview/dirstructure.html Workshop discussion of Directory Conventions]for more information. | |

| - | + | ||

| - | + | ||

| - | The convention used by DLXS is to use subdirectories named with the first letter of the collection id and the collection name:$DLXSROOT/xxx/{c}/{coll}/ where $DLXSROOT is the "tree" where you install all DLXS components, {c} is the first letter of the name of the collection you are indexing, and {coll} is the collection ID of the collection you are indexing. For example, if your collection ID is "bhlead" and your DLXSROOT is "/l1", you will place the Makefile in /l1/bin/b/bhlead/ , e.g., /l1/bin/b/bhlead/Makefile. See the DLPS Directory Conventions section for more information. | + | |

When deciding on your collection id consider that it needs to be unique across all classes to enable cross-collection searching. So you don't want both a text class collection with a collid of "my_coll" and a finding aid class collection with a collection id of "my_coll". You will also probably want to make your collection ids rather short and make sure they don't contain any special characters, since they will also be used for sub-directory names. | When deciding on your collection id consider that it needs to be unique across all classes to enable cross-collection searching. So you don't want both a text class collection with a collid of "my_coll" and a finding aid class collection with a collection id of "my_coll". You will also probably want to make your collection ids rather short and make sure they don't contain any special characters, since they will also be used for sub-directory names. | ||
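You can check the collection id rules above mechanically. The following is a minimal POSIX sh sketch:
* valid_collid and collid_in_use are hypothetical helpers.
* The 16-character cap is an arbitrary stand-in for "short".
* "No special characters" is interpreted as: lowercase letters, digits and underscores, starting with a letter.
* collid_in_use only detects an id whose prep or idx directory already exists under the same $DLXSROOT, following the {c}/{coll} convention.

```shell
#!/bin/sh
# Hypothetical check: lowercase letters, digits and "_" only, must start
# with a letter, and at most 16 characters (an assumed limit).
valid_collid() {
    id=$1
    case $id in
        ''|[![:lower:]]*|*[![:lower:][:digit:]_]*) return 1 ;;
    esac
    [ "${#id}" -le 16 ]
}

# Hypothetical check: return 0 if the id already has a prep or idx
# directory under the given DLXS root, in any class.
collid_in_use() {
    root=$1 id=$2
    c=$(printf '%s' "$id" | cut -c1)   # first letter of the id
    [ -d "$root/prep/$c/$id" ] || [ -d "$root/idx/$c/$id" ]
}
```

For example, valid_collid workshopfa && ! collid_in_use "$DLXSROOT" workshopfa succeeds only for a well-formed id that is not yet present on disk.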

The Makefile we provide, along with most of the data preparation scripts supplied with DLXS, assumes the directory structure described below. We recommend that you follow these conventions.
* Specialized scripts for collection-specific data preparation or preprocessing are stored in $DLXSROOT/bin/{c}/{coll}/. The Makefile and preparedocs.pl, which can be customized for a specific collection, are stored in this directory, e.g., /l1/bin/b/bhlead/Makefile. See the DLPS Directory Conventions section for more information.
* General processing utilities that can be applied to any collection for Findaid Class data prep are stored in $DLXSROOT/bin/f/findaid.
* Raw finding aids should be stored in $DLXSROOT/prep/{c}/{coll}/data/.
* Doctype declarations, data dictionary and fabricated region templates, and other files for preparing your data should be in $DLXSROOT/prep/{c}/{coll}/. Unlike the contents of other directories, everything in prep should be expendable after indexing. The Makefile stores temporary and intermediate files here as well.
* After running all the targets in the Makefile, the finalized, concatenated XML file for your finding aids collection will be created in $DLXSROOT/obj/{c}/{coll}/, e.g., /l1/obj/b/bhlead/bhlead.xml.
* After running all the targets in the Makefile, the index, region and data dictionary files will be stored in $DLXSROOT/idx/{c}/{coll}/, e.g., /l1/idx/b/bhlead/bhlead.idx. These are updated as the index-related targets in the Makefile are run. See the XPAT documentation for more on these types of files.
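Every area follows the same $DLXSROOT/xxx/{c}/{coll}/ pattern, so you can derive a collection's directories from its id alone. The following is a sketch that assumes the four areas listed above (bin, prep, obj, idx) plus the prep data subdirectory. coll_dirs is a hypothetical helper that only prints paths and creates nothing.

```shell
#!/bin/sh
# Print the conventional DLXS directories for a collection id.
coll_dirs() {
    root=$1 coll=$2
    c=$(printf '%s' "$coll" | cut -c1)   # first letter of the collection id
    for area in bin prep obj idx; do
        echo "$root/$area/$c/$coll"
    done
    echo "$root/prep/$c/$coll/data"      # raw finding aids live here
}
```

Piping the output to mkdir would create the tree, for example coll_dirs "$DLXSROOT" workshopfa | xargs mkdir -p. This assumes the paths contain no spaces. With Release 14, setup_newcoll performs this setup for you.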

====Fixing paths====

The installation script should have changed all instances of /l1/ to your $DLXSROOT, and all shebang lines ("#!/l/local/bin/perl") to your location of perl. However, you may wish to check the following scripts:
* $DLXSROOT/bin/f/findaid/output.dd.frag.pl
* $DLXSROOT/bin/f/findaid/inc.extra.dd.pl
* $DLXSROOT/bin/f/findaid/fixdoctype.pl
* $DLXSROOT/bin/s/samplefa/preparedocs.pl

You also might want to check that the path to the shell executable is correct in:
* $DLXSROOT/bin/f/findaid/validateeach.sh
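To check the scripts listed above, a small helper can read each script's first line and test whether the named interpreter exists and is executable. The following is a minimal sketch. check_shebang is a hypothetical helper. It treats only the first word after #! as the interpreter, so arguments such as -w are ignored.

```shell
#!/bin/sh
# Hypothetical helper: report the interpreter on a script's #! line and
# whether that interpreter exists and is executable.
check_shebang() {
    line=$(head -n 1 "$1")
    case $line in
        '#!'*) ;;
        *) echo "$1: no #! line"; return 1 ;;
    esac
    # Drop "#!" and optional spaces, then keep only the first word.
    interp=$(printf '%s\n' "$line" | sed 's/^#![[:space:]]*//; s/[[:space:]].*//')
    if [ -x "$interp" ]; then
        echo "$1: ok ($interp)"
    else
        echo "$1: missing interpreter $interp"
        return 1
    fi
}
```

For example: for f in $DLXSROOT/bin/f/findaid/*.pl $DLXSROOT/bin/f/findaid/validateeach.sh; do check_shebang "$f"; done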

If you use the Makefile in $DLXSROOT/bin/s/samplefa, you should check that the paths in the Makefile are correct for the locations of xpat, osx, and osgmlnorm as installed on your system. These are the Make variables that should be checked:
* XPATBINDIR
* OSX
* OSGMLNORM
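You can check these three variables without running make. The following is a sketch with these assumptions:
* Each variable is set on a single line of the form NAME = value, with no Make functions or references to other variables.
* Each value is a plain path: a directory for XPATBINDIR, and executables for OSX and OSGMLNORM.
* make_var and check_make_bins are hypothetical helpers.

```shell
#!/bin/sh
# Print the value of a simple "NAME = value" assignment in a Makefile.
make_var() {
    sed -n "s/^$2[[:space:]]*=[[:space:]]*//p" "$1" | head -n 1
}

# Report whether each binary location named in the Makefile exists.
check_make_bins() {
    mf=$1 status=0
    for v in XPATBINDIR OSX OSGMLNORM; do
        val=$(make_var "$mf" "$v")
        if [ -e "$val" ]; then
            echo "$v ok: $val"
        else
            echo "$v missing: '$val'"
            status=1
        fi
    done
    return $status
}
```

For example, check_make_bins $DLXSROOT/bin/s/samplefa/Makefile prints one line per variable and returns non-zero if any location is missing.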

====Step by step instructions for setting up Directories for Data Preparation====

You can use the scripts and files from the sample finding aids collection "samplefa" as a basis for creating a new collection.

<div class="tip">DLXS_TIP
*'''What is "/w/workshopfa"?'''
*'''How do I use the examples for my own collections?'''
The instructions and examples in this section are designed for use at the DLXS workshop (http://www.dlxs.org/training/workshops.html).

If you are not at the workshop and want to use these instructions for your own collections, use /{c}/{coll} instead of /w/workshopfa in the instructions that follow, where {c} is the first letter of your collection id and {coll} is your collection id. For example, if your collection id were mycoll, then instead of

cp $DLXSROOT/prep/s/samplefa/samplefa.extra.srch $DLXSROOT/prep/w/workshopfa/workshopfa.extra.srch

you would run

cp $DLXSROOT/prep/s/samplefa/samplefa.extra.srch $DLXSROOT/prep/m/mycoll/mycoll.extra.srch
</div>

This documentation makes use of the concept of the $DLXSROOT, which is the place at which your DLXS directory structure starts. We generally use /l1/.

To check your <span class="unixcommand">$DLXSROOT</span>, type the following command at the command prompt:

echo $DLXSROOT
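Scripts that depend on $DLXSROOT can fail early with a clear message if the variable is missing. The following is a minimal sketch for sh-compatible shells. check_dlxsroot is a hypothetical helper. In sh or bash you would set the variable with export DLXSROOT=/l1; in csh, use setenv DLXSROOT /l1.

```shell
#!/bin/sh
# Hypothetical helper: fail if DLXSROOT is unset, empty, or not a directory.
check_dlxsroot() {
    if [ -z "${DLXSROOT:-}" ]; then
        echo "DLXSROOT is not set" >&2
        return 1
    fi
    if [ ! -d "$DLXSROOT" ]; then
        echo "DLXSROOT=$DLXSROOT is not a directory" >&2
        return 1
    fi
    echo "DLXSROOT=$DLXSROOT"
}
```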

<div class="tip">DLXS_TIP
With Release 14, you can use the $DLXSROOT/bin/f/findaid/setup_newcoll command to automatically do all the steps in setting up files and directories as described in [[Mounting_a_Finding_Aids_Collection#Set_Up_Directories_and_Files_for_Data_Preparation|Set Up Directories and Files for Data Preparation]] and [[Mounting_a_Finding_Aids_Collection#Set_Up_Directories_and_Files_for_XPAT_Indexing|Set Up Directories and Files for XPAT Indexing]]. To set up the workshopfa collection based on samplefa, first make sure your $DLXSROOT environment variable is set as described above, then run this command:

$DLXSROOT/bin/f/findaid/setup_newcoll -c workshopfa -s $DLXSROOT/prep/s/samplefa/data

More information on the setup_newcoll script can be found on the [[setup_newcoll_manpage|setup_newcoll man page]], or by invoking the man page:

$DLXSROOT/bin/f/findaid/setup_newcoll --man

You can use setup_newcoll '''instead''' of all the steps that follow in this section.
</div>

The <span class="unixcommand">prep</span> directory under <span class="unixcommand">$DLXSROOT</span> is the space for you to take your encoded finding aids and "package them up" for use with the DLXS middleware. Create your basic directory <span class="unixcommand">$DLXSROOT/prep/w/workshopfa</span> and its <span class="unixcommand">data</span> subdirectory with the following command:

mkdir -p $DLXSROOT/prep/w/workshopfa/data

Move into your collection's directory under <span class="unixcommand">prep</span> with the following command:

cd $DLXSROOT/prep/w/workshopfa

This will be your staging area for all the things you will be doing to your EADs, and ultimately to your collection. At present, all it contains is the <span class="unixcommand">data</span> subdirectory you created a moment ago. Unlike the contents of other collection-specific directories, everything in <span class="unixcommand">prep</span> should ultimately be expendable in the production environment.

Copy the necessary files into your <span class="unixcommand">data</span> directory with the following command:

cp $DLXSROOT/prep/s/samplefa/data/*.xml $DLXSROOT/prep/w/workshopfa/data/.

We'll also need a few files to get started. They need to be copied over as well, and their paths and collection identifiers need to be changed. Run these commands:

cp $DLXSROOT/prep/s/samplefa/samplefa.ead2002.dcl $DLXSROOT/prep/w/workshopfa/workshopfa.ead2002.dcl
cp $DLXSROOT/prep/s/samplefa/samplefa.concat.ead.dcl $DLXSROOT/prep/w/workshopfa/workshopfa.concat.ead.dcl
mkdir -p $DLXSROOT/obj/w/workshopfa
mkdir -p $DLXSROOT/bin/w/workshopfa
cp $DLXSROOT/bin/s/samplefa/preparedocs.pl $DLXSROOT/bin/w/workshopfa/preparedocs.pl
cp $DLXSROOT/bin/s/samplefa/Makefile $DLXSROOT/bin/w/workshopfa/Makefile
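For a collection other than workshopfa, you can wrap the copy commands above in a function that takes the collection id as a parameter. The following is a sketch that assumes the samplefa files are installed where the commands above expect them. copy_samplefa is a hypothetical helper. It performs only these copies and does not edit paths or collection identifiers inside the copied files. With Release 14, setup_newcoll automates these steps.

```shell
#!/bin/sh
# Hypothetical helper: copy the samplefa starter files for collection $2
# under DLXS root $1, using the {c}/{coll} directory convention.
copy_samplefa() {
    root=$1 coll=$2
    c=$(printf '%s' "$coll" | cut -c1)   # first letter of the collection id
    prep=$root/prep/$c/$coll
    bin=$root/bin/$c/$coll
    mkdir -p "$prep/data" "$root/obj/$c/$coll" "$bin" || return 1
    cp "$root"/prep/s/samplefa/data/*.xml "$prep/data/" || return 1
    cp "$root/prep/s/samplefa/samplefa.ead2002.dcl" "$prep/$coll.ead2002.dcl" || return 1
    cp "$root/prep/s/samplefa/samplefa.concat.ead.dcl" "$prep/$coll.concat.ead.dcl" || return 1
    cp "$root/bin/s/samplefa/preparedocs.pl" "$bin/preparedocs.pl" || return 1
    cp "$root/bin/s/samplefa/Makefile" "$bin/Makefile"
}
```

For example, copy_samplefa "$DLXSROOT" mycoll would populate /m/mycoll in each area.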

Make sure you check, and edit if necessary, the perl shebang line and the paths to your shell and directories in these files:
* $DLXSROOT/bin/f/findaid/stripdoctype.pl
* $DLXSROOT/bin/f/findaid/fixdoctype.pl
* $DLXSROOT/bin/f/findaid/validateeach.sh
* $DLXSROOT/bin/w/workshopfa/preparedocs.pl
* $DLXSROOT/bin/w/workshopfa/Makefile

With the ready-to-go EAD 2002 encoded finding aids files in the <span class="unixcommand">data</span> directory, we are ready to begin the preparation process. This will include:
# Validating the files individually against the EAD 2002 DTD
# Concatenating the files into one larger XML file
# Validating the concatenated file against the ''dlxsead2002'' DTD
# "Normalizing" the concatenated file
# Validating the normalized concatenated file against the ''dlxsead2002'' DTD

These steps are generally handled via the <span class="unixcommand">Makefile</span> in <span class="unixcommand">$DLXSROOT/bin/s/samplefa</span>, which we have copied to $DLXSROOT/bin/w/workshopfa. See the [[release14 Makefile |Example Makefile]].

<div class="tip">DLXS_TIP:
Make sure you have changed your copy of the Makefile to reflect /w/workshopfa instead of /s/samplefa, and that your $DLXSROOT is set correctly in the Makefile. You will want to change lines 1-3 accordingly:

1 DLXSROOT = /l1
2 NAMEPREFIX = samplefa
3 FIRSTLETTERSUBDIR = s
</div>
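You can also change the three assignments with sed instead of by hand. The following is a sketch with these assumptions:
* GNU sed, whose -i option edits in place without a backup suffix. BSD sed needs -i ''.
* Each variable is assigned on a line that begins with its name.
* set_makefile_vars is a hypothetical helper.

The helper rewrites each whole line, so every new value is written without trailing whitespace.

```shell
#!/bin/sh
# Hypothetical helper: set DLXSROOT, NAMEPREFIX and FIRSTLETTERSUBDIR in a
# copied Makefile. Usage: set_makefile_vars MAKEFILE DLXSROOT COLLID
set_makefile_vars() {
    mf=$1 root=$2 coll=$3
    c=$(printf '%s' "$coll" | cut -c1)   # first letter of the collection id
    sed -i \
        -e "s#^DLXSROOT[[:space:]]*=.*#DLXSROOT = $root#" \
        -e "s#^NAMEPREFIX[[:space:]]*=.*#NAMEPREFIX = $coll#" \
        -e "s#^FIRSTLETTERSUBDIR[[:space:]]*=.*#FIRSTLETTERSUBDIR = $c#" \
        "$mf"
}
```

For example: set_makefile_vars $DLXSROOT/bin/w/workshopfa/Makefile "$DLXSROOT" workshopfa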

<div class="note"> Tip: Be sure not to add any space after the workshopfa or w. The Makefile ignores space immediately before and after the equals sign, but treats all other space as part of the string. If you accidentally put a space after FIRSTLETTERSUBDIR = w, you will get an error like "[validateeach] Error 127" or "Can't open $DLXSROOT/prep/w*.xml: No such file or directory at $DLXSROOT/bin/f/findaid/fixdoctype.pl line 25."

If you look closely at the first line of what the Makefile reported to standard output (see below), you will see that the Makefile gets confused about file paths. Instead of running the command:

$DLXSROOT/bin/f/findaid/validateeach.sh
-d $DLXSROOT/prep/w/workshopfa/data/
-x $DLXSROOT/misc/sgml/xml.dcl
-t $DLXSROOT/prep/w/workshopfa/workshopfa.ead2002.dcl

it runs a command whose file paths don't make sense:

$DLXSROOT/bin/f/findaid/validateeach.sh
-d $DLXSROOT/prep/w /workshopfa/data/
-x $DLXSROOT/misc/sgml/xml.dcl
-t $DLXSROOT/prep/w /workshopfa/workshopfa .ead2002.dcl
working on $DLXSROOT/prep/w*.xml
Can't open $DLXSROOT/prep/w*.xml: No such file or directory at $DLXSROOT/bin/f/findaid/fixdoctype.pl line 25.

It looks for XML files in $DLXSROOT/prep/w instead of $DLXSROOT/prep/w/workshopfa/data and exits.
</div>
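Trailing whitespace in a variable assignment is easy to miss in an editor. The following is a minimal sketch that lists, with line numbers, any assignment ending in a space or tab. find_trailing_space_vars is a hypothetical helper and only recognizes simple NAME = value lines.

```shell
#!/bin/sh
# Hypothetical helper: print "lineno:line" for each NAME = value line that
# ends in whitespace, since that whitespace becomes part of the value.
find_trailing_space_vars() {
    grep -n '^[A-Za-z_][A-Za-z0-9_]*[[:space:]]*=.*[[:space:]]$' "$1"
}
```

For example, find_trailing_space_vars $DLXSROOT/bin/w/workshopfa/Makefile prints nothing when the assignments are clean.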

<div class="note">
Further note on editing the Makefile: If you modify or write your own Make targets, make sure that each command line starts with a real tab character rather than spaces. The easiest way to check for these kinds of errors is to run "cat -vet Makefile", which shows each tab as ^I and marks the end of each line with $.
</div>
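Alongside cat -vet, a quick grep can list the lines that start with a space. The following is a heuristic sketch. find_space_indented is a hypothetical helper. It flags every space-indented line, so it will also report legitimate continuation lines, and its output needs a manual look.

```shell
#!/bin/sh
# Hypothetical helper: print "lineno:line" for every line that begins with
# a space. Recipe (command) lines in a Makefile must begin with a tab.
find_space_indented() {
    grep -n '^ ' "$1"
}
```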

The installation program should have changed the locations of the various binaries in the Makefile to match your answers during installation. However, it's a good idea to check that the locations of the various binaries have been changed to match your installation.

* Change XPATBINDIR = /l/local/bin/ to the location of the <span class="unixcommand">xpat</span> binary in your installation | * Change XPATBINDIR = /l/local/bin/ to the location of the <span class="unixcommand">xpat</span> binary in your installation | ||

* Change the location of the <span class="unixcommand">osx</span> binary from | * Change the location of the <span class="unixcommand">osx</span> binary from | ||

| Line 297: | Line 302: | ||

to the location in your installation

<div class="tip">Tip: osx and osgmlnorm are installed as part of the OpenSP package. If you are using Linux, make sure that the OpenSP package for your Linux distribution is installed, and change the paths above to match your installation. If you are using Solaris, you will have to install (and possibly compile) OpenSP. You may also need to set the $LD_LIBRARY_PATH environment variable so that the OpenSP programs can find their shared libraries. For troubleshooting such problems, the Unix '''ldd''' utility is invaluable. See also the links to the OpenSP package on the tools page: [[Useful Tools]]
</div>
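Before running the Makefile targets, it can help to confirm that the OpenSP programs can actually be found. The sketch below is a hypothetical helper, check_bins, that resolves each argument with command -v, so you can pass either bare program names (looked up on $PATH) or the absolute paths you set in the Makefile. The three programs checked are the OpenSP tools named on this page.

```shell
#!/bin/sh
# check_bins: report whether each argument resolves via "command -v".
# Accepts bare names (searched on $PATH) or absolute paths.
# Returns the number of programs that could not be found.
check_bins() {
    missing=0
    for prog in "$@"; do
        if path=$(command -v "$prog" 2>/dev/null); then
            echo "found:   $prog -> $path"
        else
            echo "MISSING: $prog"
            missing=$((missing + 1))
        fi
    done
    return "$missing"
}

# OpenSP programs used by the Makefile targets on this page
check_bins onsgmls osgmlnorm osx || echo "$? program(s) missing: check \$PATH and the Makefile"

# If a program is found but will not start, look for unresolved libraries:
#   ldd "$(command -v osx)" | grep 'not found'
```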

----
===Set Up Directories and Files for XPAT Indexing===

If you are not following these instructions at the DLXS workshop, substitute /{c}/{coll} for every instance of /w/workshopfa, where {coll} is your collection id and {c} is its first letter, and substitute {coll} wherever you see "workshopfa" in the following instructions.
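The substitution rule above is mechanical, so it can be scripted. This sketch derives {c} from the collection id and prints the collection's directories; the function name coll_path and the DLXSROOT placeholder used when the variable is unset are illustrative assumptions.

```shell
#!/bin/sh
# coll_path: turn a collection id into the "{c}/{coll}" path fragment.
coll_path() {
    c=$(printf '%s' "$1" | cut -c1)   # {c}: first letter of the id
    printf '%s/%s\n' "$c" "$1"
}

DLXSROOT=${DLXSROOT:-/path/to/dlxs}   # placeholder if DLXSROOT is unset
for area in prep bin obj idx; do
    echo "$DLXSROOT/$area/$(coll_path workshopfa)"
done
```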

First, we need to create the rest of the directories in the '''workshopfa''' environment with the following command:

<pre>
mkdir -p $DLXSROOT/idx/w/workshopfa
</pre>

The <span class="unixcommand">bin</span> directory, created earlier when we set up directories for data preparation, holds any scripts or tools used specifically for this collection; <span class="unixcommand">obj</span> (also created earlier) holds the "object", that is, the XML file for the collection; and <span class="unixcommand">idx</span> holds the XPAT indexes. Now we need to finish populating the directories:

<pre>
cp $DLXSROOT/prep/s/samplefa/samplefa.blank.dd $DLXSROOT/prep/w/workshopfa/workshopfa.blank.dd
cp $DLXSROOT/prep/s/samplefa/samplefa.extra.srch $DLXSROOT/prep/w/workshopfa/workshopfa.extra.srch
</pre>

'''Both of these files need to be edited''' to reflect the new collection name and the paths to your particular directories. Failing to change even one line in one file can result in puzzling errors, because the scripts ''are'' working, just not necessarily in the directories you are looking at.

<pre>
cd $DLXSROOT/prep/w/workshopfa
</pre>

After editing the files, you can check that you changed all the "samplefa" strings with the following command:

<pre>
grep -l "samplefa" $DLXSROOT/prep/w/workshopfa/*
</pre>

You also need to check that "/l1/" has been replaced by whatever $DLXSROOT is on your server. If you don't have an /l1 directory on your server (very likely unless you are working on a DLPS machine), you can check with:

<pre>
grep -l "/l1" $DLXSROOT/prep/w/workshopfa/*
</pre>
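Rather than editing each copied file by hand, the substitutions can be scripted. The sketch below is one hypothetical way to do it with sed: retarget rewrites the /{c}/{coll} path fragment, then the bare collection name, then the root path, and finally lists any file that still mentions the old values. The arguments in the example (samplefa, workshopfa, /l1) are assumptions based on this page; check the edited files by eye afterwards, since a blind substitution can also change strings you meant to keep.

```shell
#!/bin/sh
# retarget OLD NEW OLD_ROOT NEW_ROOT FILE...
# Hypothetical helper: rewrite collection name and root path in place.
# Values must not contain "|" or "&", which sed treats specially here.
retarget() {
    old=$1 new=$2 old_root=$3 new_root=$4
    shift 4
    o=$(printf '%s' "$old" | cut -c1)     # e.g. s for samplefa
    n=$(printf '%s' "$new" | cut -c1)     # e.g. w for workshopfa
    for f in "$@"; do
        # Write to a temp file and move it back (avoids non-portable sed -i)
        sed -e "s|/$o/$old|/$n/$new|g" \
            -e "s|$old|$new|g" \
            -e "s|$old_root/|$new_root/|g" "$f" > "$f.tmp" && mv "$f.tmp" "$f"
    done
    # List files that still contain either old string
    grep -l -e "$old" -e "$old_root/" "$@" || echo "no stale references"
}

# Example:
#   cd $DLXSROOT/prep/w/workshopfa
#   retarget samplefa workshopfa /l1 "$DLXSROOT" workshopfa.blank.dd workshopfa.extra.srch
```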

[[#top|Top]]

==Finding Aids Data Preparation==


| - | + | ||

| - | |||

| - | < | + | ===Overview of Data Preparation and Indexing Steps=== |

| + | |||

| + | '''Data Preparation''' | ||

| + | |||

| + | # Validate the files individually against the EAD ''2002'' DTD<br />'''make validateeach'''<br /> | ||

| + | # Concatenate the files into one larger XML file<br />'''make prepdocs'''<br /> | ||

| + | # Validate the concatenated file against the ''dlxsead2002'' DTD:<br />'''make validate'''<br /> | ||

| + | # Normalize the concatenated file.<br />'''make norm'''<br /> | ||

| + | # Validate the normalized concatenated file against the ''dlxsead2002'' DTD <br />'''make validate'''<br /> | ||

| + | |||

| + | The end result of these steps is a file containing the concatenated EADs wrapped in a <COLL> element which validates against the dlxsead2002 and is ready for indexing: | ||

| + | |||

| + | <COLL><br /><ead><eadheader><eadid>1</eadid>...</eadheader>... content</ead><br /><ead><eadheader><eadid>2</eadid>...</eadheader>... content</ead><br /><ead><eadheader><eadid>3</eadid>...</eadheader>... content</ead><br /></COLL> | ||

| + | |||

| + | |||

| + | '''WARNING!''' If there are extra characters or some other problem with the part of the program that strips out the xml declaration and the doctype declaration the file will end up like: | ||

| + | |||

| + | |||

| + | <COLL><br />'''baddata'''<ead><eadheader><eadid>1</eadid>...</eadheader>... content</ead><br />'''baddata'''<ead><eadheader><eadid>2</eadid>...</eadheader>... content</ead><br />'''baddata'''<ead><eadheader><eadid>3</eadid>...</eadheader>... content</ead><br /></COLL> | ||

| + | |||

| + | In this case you will get "character data not allowed" or similar errors during the make validate step. You can troubleshoot by looking at the concatenated file and/or checking your original EADs. | ||
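To locate stray data like this without reading the whole concatenated file, a rough check is to look for non-blank text between <COLL> or a closing </ead> and the next opening <ead>. The helper below, find_stray_data, is a hypothetical sketch: it joins lines so that tags split across lines still pair up, it uses grep -o (available in GNU and BSD grep), and it only catches stray text that contains no < character.

```shell
#!/bin/sh
# find_stray_data FILE: print each "<COLL>...<ead" or "</ead>...<ead" gap
# that contains something other than whitespace. Hypothetical helper.
find_stray_data() {
    tr '\n' ' ' < "$1" |
        grep -oE '(<COLL>|</ead>)[^<]*<ead' |
        grep -vE '^(<COLL>|</ead>)[[:space:]]*<ead$' || true
}

# Example: find_stray_data $DLXSROOT/obj/w/workshopfa/workshopfa.xml
```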

'''Indexing'''

# '''make singledd''' indexes all the words in the concatenated file.
# '''make xml''' indexes the XML structure by reading the DTD. It validates as it indexes.
# '''make post''' builds and indexes fabricated regions based on the XPAT queries stored in the workshopfa.extra.srch file.

===Preprocessing===
===Validating and Normalizing Your Data===

==== <span id="dataprep_step1">'''Step 1: Validating the files individually against the EAD 2002 DTD'''</span> ====

<pre>
cd $DLXSROOT/bin/w/workshopfa
</pre>

The Makefile runs the following command:

<pre>
$DLXSROOT/bin/f/findaid/validateeach.sh
</pre>

What's happening: the Makefile runs the Bourne shell script [[validateeach.sh.r14|validateeach.sh]] in the $DLXSROOT/bin/f/findaid directory. The script processes each *.xml file in the <span class="unixcommand">data</span> subdirectory: for each file, it creates a temporary file without the public DOCTYPE declaration and then runs <span class="unixcommand">onsgmls</span> on it to make sure it conforms to the EAD 2002 DTD. If validation errors occur, error files are written to the <span class="unixcommand">data</span> subdirectory with the same name as the finding aid file but with the extension <span class="unixcommand">.err</span>. If there are validation errors, fix the problems in the source XML files and re-run this step.

Check for error files by running the following command:

<pre>
ls -l $DLXSROOT/prep/w/workshopfa/data/*err
</pre>

If there are any *err files, you can look at them with the following command:

<pre>
less $DLXSROOT/prep/w/workshopfa/data/*err
</pre>
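When there are many finding aids, it can be quicker to see only the error files that actually contain something. The hypothetical show_err_files helper below prints each non-empty *.err file under a header line and then a count; it is a convenience sketch, not part of the DLXS scripts.

```shell
#!/bin/sh
# show_err_files DIR: print every non-empty *.err file in DIR under a
# "== name" header, then a count. Hypothetical helper, not part of DLXS.
show_err_files() {
    count=0
    for f in "$1"/*.err; do
        # Skip empty files, and the literal pattern when nothing matches
        [ -s "$f" ] || continue
        echo "== $f"
        cat "$f"
        count=$((count + 1))
    done
    echo "$count error file(s) with content"
}

# Example: show_err_files $DLXSROOT/prep/w/workshopfa/data | less
```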

=====Common error messages and solutions:=====
;onsgmls<nowiki>:</nowiki> Command not found
:The location of the onsgmls binary is not in your $PATH.
;entityref errors such as "general entity 'foobar' not defined"
:If you use entityrefs in your EADs, you may see errors relating to problems resolving entities ([[Example entityref errors]]). The solution is to add the entityref declarations to the doctype declaration in these two files:
*;$DLXSROOT/prep/s/samplefa/samplefa.ead2002.dcl
: This is the doctype declaration used by the validateeach.sh script; it points to the EAD 2002 DTD.
*;$DLXSROOT/prep/s/samplefa/samplefa.concat.ead.dcl
: This is the doctype declaration that points to the dlxsead2002 DTD, which is essentially the EAD 2002 DTD with modifications that allow multiple EADs within one file. It is used by the "make validate" target of the Makefile to validate the concatenated file containing all of your EADs.

*See $DLXSROOT/prep/s/samplefa/samplefa.ead2002.entity.example.dcl for an example of adding entityrefs to your doctype declaration files.

==== <span id="dataprep_step2">'''Step 2: Concatenating the files into one larger XML file (and running some preprocessing commands)'''</span> ====

<pre>
cd $DLXSROOT/bin/w/workshopfa
make prepdocs
</pre>

The Makefile runs the following command:

<pre>
$DLXSROOT/bin/w/workshopfa/preparedocs.pl
-d $DLXSROOT/prep/w/workshopfa/data
-o $DLXSROOT/obj/w/workshopfa/workshopfa.xml
-l $DLXSROOT/prep/w/workshopfa/logfile.txt
</pre>

This runs the preparedocs.pl script on all the files in the specified data directory and writes the output to the workshopfa.xml file in the appropriate /obj subdirectory. It also writes a logfile to the /prep directory.

The Perl script does two sets of things:

'''Concatenating the files'''

The script finds all XML files in the <span class="unixcommand">data</span> subdirectory, strips the XML declaration and the DOCTYPE declaration from each file, and then concatenates them. It also wraps the concatenated EADs in a <COLL> tag. The end result looks like:

| Line 396: | Line 440: | ||

<COLL><br />'''baddata'''<ead><eadheader><eadid>1</eadid>...</eadheader>... content</ead><br />'''baddata'''<ead><eadheader><eadid>2</eadid>...</eadheader>... content</ead><br />'''baddata'''<ead><eadheader><eadid>3</eadid>...</eadheader>... content</ead><br /></COLL>

This will cause the document to be invalid, since the dlxsead2002 DTD does not allow anything between the closing </ead> tag of one finding aid and the opening <ead> tag of the next.
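The concatenation itself can be pictured with a few lines of shell. This is a rough illustration under stated assumptions, not a replacement for preparedocs.pl: the hypothetical concat_eads function removes an XML declaration and a single-line DOCTYPE declaration from each file and wraps the results in <COLL>. It does not remove byte order marks, processing instructions, or multi-line DOCTYPE declarations (including ones with an internal subset), and it does not rewrite ids.

```shell
#!/bin/sh
# concat_eads FILE...: illustrative only; writes the combined file to stdout.
concat_eads() {
    echo '<COLL>'
    for f in "$@"; do
        # Drop "<?xml ...?>" and a one-line "<!DOCTYPE ...>", keep the rest.
        # In a basic regular expression "?" is a literal character.
        sed -e 's/<?xml [^>]*?>//' -e 's/<!DOCTYPE[^>]*>//' "$f"
    done
    echo '</COLL>'
}

# Example: concat_eads data/*.xml > /tmp/concat-test.xml
```

The blank lines left where the declarations were removed are harmless, because whitespace between </ead> and <ead> is allowed.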

'''Preprocessing steps'''

The Perl script also does some preprocessing on all the files. Some of these steps are customized to the needs of the Bentley.

The preprocessing steps are:

* finds all id attributes and prepends a number to them
* removes the XML declaration
* removes the DOCTYPE declaration
* removes XML processing instructions
* removes the UTF-8 byte order mark (BOM)

Bentley-specific processing:

* adds the prefix string "dao-bhl" to all DAO links
* removes empty <span class="unixcommand">persname</span>, <span class="unixcommand">corpname</span>, and <span class="unixcommand">famname</span> elements

<div class="tip">DLXS_TIP: Look at the Perl code and decide whether you need to modify it to suit your encoding practices. You will probably want to comment out the Bentley-specific processing.</div>
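Since a leftover UTF-8 byte order mark is one of the causes of validation errors after concatenation, it can be useful to know which source files carry one. The hypothetical list_bom_files helper below reports files whose first three bytes are EF BB BF; it relies on head -c, which GNU and BSD systems provide although it is not in POSIX.

```shell
#!/bin/sh
# has_bom FILE: succeed if FILE starts with the bytes EF BB BF.
has_bom() {
    [ "$(head -c 3 "$1" | od -An -tx1 | tr -d ' \n')" = "efbbbf" ]
}

# list_bom_files FILE...: print the name of each file that has a BOM.
list_bom_files() {
    for f in "$@"; do
        if has_bom "$f"; then
            echo "$f"
        fi
    done
}

# Example: list_bom_files $DLXSROOT/prep/w/workshopfa/data/*.xml
```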

The output of the combined concatenation and preprocessing steps is a single XML file, named for the collection, which is deposited into the obj subdirectory.

If your collections need to be transformed in other ways, or if you do not want some of the transformations to take place (the DAO changes, for example), you can edit the preparedocs.pl file to effect the changes. Some changes you may want to make include:

* Changing the algorithm used to make id attributes unique. For example, if your encoding practices use id attributes and targets, the out-of-the-box algorithm will break the relationship between the attributes and their targets. One possible modification is to prepend the eadid or filename to all id and target attributes. (See the commented-out code in preparedocs.pl for an example of how to do this.)
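To make the suggested modification concrete, the sketch below prefixes every id and target attribute value in one file with the file's base name, so ids stay unique across the concatenated file while id/target pairs still match. It is written in shell with sed purely for illustration (the actual change would go into preparedocs.pl, which is Perl); the helper name prefix_ids is hypothetical, and it assumes double-quoted, single-valued attributes and base names without & characters.

```shell
#!/bin/sh
# prefix_ids FILE: print FILE with its base name (minus .xml) and a hyphen
# prepended to every id="..." and target="..." value. Illustration only.
prefix_ids() {
    prefix=$(basename "$1" .xml)
    # Requiring whitespace before id= avoids matching names such as eadid
    sed -e "s/\([[:space:]]id=\"\)/\1${prefix}-/g" \
        -e "s/\([[:space:]]target=\"\)/\1${prefix}-/g" "$1"
}

# Example: prefix_ids data/ms1.xml > /tmp/ms1.prefixed.xml
```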

| - | + | ||

| - | + | '''Changing the default sort order or indexing only certain files in the data directory''' | |

| - | + | The default order for search results in Findaid Class is the order they were concatenated. If you want to change the default order or if you have a reason to only index some of the files in your <span>data</span> directory,you can make a list of the files you wish to concatenate and put the list in a file in <span class="greentext">$DLXSROOT/prep/w/workshopfa</span> called <span class="greentext">list_of_eads.</span> | |

| + | You can then run the | ||

| + | <span class="greentext">"make prepdocslist"</span> | ||

| - | < | + | command which will run the <span class="greentext">preparedocs.pl</span> with the<span class="greentext"> -i inputfilelist</span> flag instead of the <span class="greentext">-d dir</span> flag. This tells the program to read a list of files instead of processing all the xml files in the specified directory. To create your list of files you can write a script which looks at the eads for some element that you want to sort by and then outputs a list of filenames sorted by that order, you can then either name the file <span class="greentext">list_of_eads.</span> or |

| + | pass that filname to <span class="greentext">preparedocs.pl -i</span> command so it would concatenate the files in the order listed. | ||
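A minimal version of such a script might look like the following. The helper name make_ead_list, the choice of eadid as the sort key, and the output format (one file path per line) are assumptions; check preparedocs.pl --man for the list format it actually expects. The sed extraction only finds an element whose start and end tags are on the same line.

```shell
#!/bin/sh
# make_ead_list ELEMENT FILE...: print FILE paths sorted (case-insensitively)
# by the text of ELEMENT on the first line where <ELEMENT>...</ELEMENT> occurs.
make_ead_list() {
    elem=$1
    shift
    tab=$(printf '\t')
    for f in "$@"; do
        key=$(sed -n "s|.*<$elem[^>]*>\([^<]*\)</$elem>.*|\1|p" "$f" | head -n 1)
        printf '%s\t%s\n' "$key" "$f"
    done | sort -f -t "$tab" -k1,1 | cut -f2
}

# Example (sort by eadid):
#   make_ead_list eadid $DLXSROOT/prep/w/workshopfa/data/*.xml \
#       > $DLXSROOT/prep/w/workshopfa/list_of_eads
```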

For more information on options to the <span class="greentext">preparedocs.pl</span> script, run the command:

<pre>
$DLXSROOT/bin/s/samplefa/preparedocs.pl --man
</pre>

----

==== <span id="dataprep_step3">'''Step 3: Validating the concatenated file against the dlxsead2002 DTD'''</span> ====

<pre>
make validate
</pre>

The Makefile runs the following command:

<pre>
onsgmls -wxml -s -f $DLXSROOT/prep/w/workshopfa/workshopfa.errors
$DLXSROOT/misc/sgml/xml.dcl
$DLXSROOT/prep/w/workshopfa/workshopfa.concat.ead.dcl
$DLXSROOT/obj/w/workshopfa/workshopfa.xml
</pre>

This runs onsgmls against the concatenated file using the dlxsead2002 DTD, and writes any errors to the workshopfa.errors file in the collection's subdirectory of $DLXSROOT/prep (for the workshop, $DLXSROOT/prep/w/workshopfa). [[validate.R14|More details]]

Note that we are running this using <span class="unixcommand">'''workshopfa.concat.ead.dcl'''</span>, not <span class="unixcommand">'''workshopfa.ead2002.dcl'''</span>. The '''workshopfa.concat.ead.dcl''' file points to '''$DLXSROOT/misc/sgml/dlxsead2002.dtd''', which is the ''dlxsead2002'' DTD. The ''dlxsead2002'' DTD is identical to the ''EAD 2002'' DTD except that it adds a wrapping element, <span class="unixcommand"><COLL></span>, so that more than one <span class="unixcommand">ead</span> element (more than one finding aid) can be combined into one file. It is, of course, a good idea to validate the file now before going further.

Run the following command:

<pre>
ls -l $DLXSROOT/prep/w/workshopfa/workshopfa.errors
</pre>

If there is a workshopfa.errors file, run the following command to look at the reported errors:

<pre>
less $DLXSROOT/prep/w/workshopfa/workshopfa.errors
</pre>

=====Common common causes of error messages and solutions=====
;make<nowiki>:</nowiki> onsgmls<nowiki>:</nowiki> Command not found
:The OSGMLNORM variable in the Makefile does not point to the correct location of onsgmls for your installation, or OpenSP is not installed.
;If there were no errors when you ran "make validateeach" but you are now seeing errors
:There was very likely a problem with the preparedocs.pl processing, for example:
* The DOCTYPE declaration did not get completely removed. (Scripts prior to the Release 13 August 24 patch don't always remove multi-line DOCTYPE declarations.)
* There was a UTF-8 byte order mark at the beginning of one or more of the concatenated files.
;onsgmls<nowiki>:</nowiki>/l1/dev/tburtonw/misc/sgml/xml.dcl<nowiki>:</nowiki>1<nowiki>:</nowiki>W<nowiki>:</nowiki> SGML declaration was not implied
:This warning can be ignored.
<div class="tip">Warning: If you see any other errors, '''STOP!''' Determine the cause of the problem, fix it, and rerun the steps until make validate reports no errors. If you continue with the next steps using an invalid XML document, the errors will compound and it will be very difficult to trace the cause of the problem.</div>
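A small wrapper can apply this stop-or-continue rule mechanically. The hypothetical check_validate_errors function below treats a missing or empty error file as success, ignores the "SGML declaration was not implied" warning, and otherwise prints the remaining lines and returns a non-zero status so that a calling script stops.

```shell
#!/bin/sh
# check_validate_errors FILE: succeed if FILE is missing, empty, or holds only
# the ignorable "SGML declaration was not implied" warning; otherwise print
# the other lines and return 1. Hypothetical helper.
check_validate_errors() {
    if [ ! -s "$1" ]; then
        echo "no errors reported"
        return 0
    fi
    # grep -v succeeds only if at least one other line remains
    if grep -v 'SGML declaration was not implied' "$1"; then
        echo "STOP: fix the errors above before continuing" >&2
        return 1
    fi
    echo "only the ignorable SGML declaration warning"
}

# Example:
#   check_validate_errors $DLXSROOT/prep/w/workshopfa/workshopfa.errors
```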

==== <span id="dataprep_step4">'''Step 4: Normalizing the concatenated file'''</span> ====

<pre>
make norm
</pre>

The Makefile runs a series of copy statements and two main commands:

<pre>
1.) /l/local/bin/osgmlnorm -f $DLXSROOT/prep/w/workshopfa/workshopfa.osgmlnorm.errors
$DLXSROOT/misc/sgml/xml.dcl
$DLXSROOT/prep/w/workshopfa/workshopfa.concat.ead.dcl
$DLXSROOT/obj/w/workshopfa/workshopfa.xml.prenorm > $DLXSROOT/obj/w/workshopfa/workshopfa.xml.postnorm

2.) /l/local/bin/osx -E0 -bUTF-8 -xlower -xempty -xno-nl-in-tag
-f $DLXSROOT/prep/w/workshopfa/workshopfa.osx.errors
$DLXSROOT/misc/sgml/xml.dcl
$DLXSROOT/prep/w/workshopfa/workshopfa.concat.ead.dcl
$DLXSROOT/obj/w/workshopfa/workshopfa.xml.postnorm > $DLXSROOT/obj/w/workshopfa/workshopfa.xml.postnorm.osx
</pre>

These commands normalize your collection data, which means that all attributes are put in the order in which they are defined in the DTD. Even though your collection data is XML, and attribute order should be irrelevant according to the XML specification, a bug in one of the supporting libraries used by xmlrgn (part of the indexing software) requires attributes to appear in the order in which they are defined in the DTD. If you have out-of-order attributes and don't run make norm, you will get ''"invalid endpoints"'' errors during the make post step.

<div class="tip">Tip: Step 1, which normalizes the document, writes its errors to <span class="unixcommand">$DLXSROOT/prep/w/workshopfa/workshopfa.osgmlnorm.errors</span>. Be sure to check this file.</div>

<pre>
less $DLXSROOT/prep/w/workshopfa/workshopfa.osgmlnorm.errors
</pre>

Step 2, which runs osx to convert the normalized document back into XML, produces many error messages, which are written to $DLXSROOT/prep/w/workshopfa/workshopfa.osx.errors. These also result in the following message on standard output:

<pre>
make: [norm] Error 1 (ignored)
</pre>

These errors occur because osx is validating the SGML document created by the osgmlnorm step against an XML DTD (the EAD 2002 DTD).
<span class="redtext">These are the only errors which may generally be ignored.</span>
However, if the next recommended step, validating the file again, reveals an invalid document, you may want to rerun osx and look at the errors for clues. (Only do this if you are sure that the problem is not caused by XML processing instructions in the documents, as explained below.)

==== <span id="dataprep_step5">'''Step 5: Validating the normalized file against the dlxsead2002 DTD'''</span> ====

<pre>
make validate2
</pre>

Check the resulting error file:

<pre>
less $DLXSROOT/prep/w/workshopfa/workshopfa.errors2
</pre>

We validate again to make sure that the normalization process did not produce an invalid document. This is necessary because under some circumstances the "make norm" step can produce invalid XML. One known cause is the presence of XML processing instructions, for example '''"<?Pub Caret1?>"'''. Although XML processing instructions are supposed to be ignored by any XML application that does not understand them, osgmlnorm and osx are SGML tools, and they end up mangling the output XML. The preparedocs.pl script used in the "make prepdocs" step should have removed any XML processing instructions.

<div class="tip">Tip: If this second validation step fails but the "make validate" step before "make norm" succeeded, there is some problem with the normalization process. You may want to start over by running "make clean" and then going through steps 1-4 again. If that doesn't solve the problem, check your EADs to make sure they do not contain XML processing instructions; if they don't, you will need to look at the error messages from the second validation.</div>
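To check your EADs for processing instructions before re-running the steps, you can search for <? sequences other than the XML declaration. The hypothetical find_pis helper below is a rough line-based sketch: it prints file name, line number and line for each match, and it will miss a processing instruction that shares a line with the XML declaration.

```shell
#!/bin/sh
# find_pis FILE...: list lines containing "<?name" other than "<?xml ".
# /dev/null is appended so grep always prefixes the file name.
find_pis() {
    grep -n '<?[A-Za-z_]' "$@" /dev/null | grep -v '<?xml[[:space:]]' || true
}

# Example: find_pis $DLXSROOT/prep/w/workshopfa/data/*.xml
```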

==Building the Index==


===Indexing Overview===

Indexing is relatively straightforward once you have set up your data and directories and prepared and normalized your data as described in:
*[[Mounting_Finding_Aids:_Release_14/Workshop_working_copy#Step_by_step_instructions_for_setting_up_Directories_for_Data_Preparation|Step by step instructions for setting up Directories for Data Preparation]]
*[[Mounting_Finding_Aids:_Release_14/Workshop_working_copy#Set_Up_Directories_and_Files_for_XPAT_Indexing|Set Up Directories and Files for XPAT Indexing]]
*[[Finding Aids Data Preparation#Validating_and_Normalizing_Your_Data|Validating and Normalizing Your Data]]

To create an index for use with the Findaid Class interface, you will need to index the words in the collection, then index the XML (the structural metadata, if you will), and finally "fabricate" regions based on a combination of elements (for example, defining what the "main entry" is without adding a <MAINENTRY> tag around the appropriate <AUTHOR> or <TITLE> element).

The main work in the indexing step is making sure that the fabricated regions in the workshopfa.extra.srch file match the characteristics of your collection.

<div class="tip"> Tip: If the final validation step in [[Finding Aids Data Preparation#Step 5: Validating the normalized file against the dlxsead2002 DTD|Validating the normalized file against the dlxsead2002 DTD]] produced errors, you must fix the problem before running the indexing steps. Attempting to index an invalid document will lead to indexing problems and/or corrupt indexes.</div>

The <span class="unixcommand">Makefile</span> in the <span class="unixcommand">$DLXSROOT/bin/w/workshopfa</span> directory contains the commands necessary to build the index. Run them from that directory:

<pre>
cd $DLXSROOT/bin/w/workshopfa
</pre>

The following commands build the index:

'''make singledd''' indexes the words in the EADs that have been concatenated into one large file for the collection.

'''make xml''' indexes the XML structure by reading the DTD. It validates as it indexes.

'''make post''' builds and indexes fabricated regions based on the XPAT queries stored in the workshopfa.extra.srch file. Because every collection is different, the *.extra.srch file will probably need to be adapted for your collection. If you try to build fabricated regions from elements not used in your finding aids collection, you will see errors like the following when you run make post:

<pre>
<Error>No information for region famname in the data dictionary.</Error>
Error found: <Error>syntax error before: ")</Error>
</pre>
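If you save the make post output to a file (for example with make post > post.log 2>&1, which is an assumption about how you run it), the region names behind errors like the first one can be pulled out in one step. The hypothetical missing_regions helper below does this with sed; each name it prints is an element that a rule in your *.extra.srch file refers to but that does not occur in your collection.

```shell
#!/bin/sh
# missing_regions LOG: print, once each, the region names from
# "No information for region NAME in the data dictionary" messages.
missing_regions() {
    sed -n 's/.*No information for region \([^ ]*\) in the data dictionary.*/\1/p' "$1" |
        sort -u
}

# Example (capturing the log this way is an assumption):
#   cd $DLXSROOT/bin/w/workshopfa
#   make post > post.log 2>&1
#   missing_regions post.log
```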

===Step by Step Instructions for Indexing===

====<span id="indexing_step1">'''Step 1: Indexing the text'''</span>====
Index all the words in the file of concatenated EADs with the following command:

<pre>
cd $DLXSROOT/bin/w/workshopfa
make singledd
</pre>

''The Makefile runs the following commands:''

<pre>
cp $DLXSROOT/prep/w/workshopfa/workshopfa.blank.dd
$DLXSROOT/idx/w/workshopfa/workshopfa.dd
/l/local/xpat/bin/xpatbld -m 256m -D $DLXSROOT/idx/w/workshopfa/workshopfa.dd
cp $DLXSROOT/idx/w/workshopfa/workshopfa.dd
$DLXSROOT/prep/w/workshopfa/workshopfa.presgml.dd
</pre>

====<span id="indexing_step2">'''Step 2: Indexing the XML'''</span>====
Index all the elements and attributes listed in the EAD DTD that occur in the file of concatenated EADs by running the following command:

<pre>
make xml
</pre>

''The Makefile runs the following commands:''

<pre>
cp $DLXSROOT/prep/w/workshopfa/workshopfa.presgml.dd
$DLXSROOT/idx/w/workshopfa/workshopfa.dd
/l/local/xpat/bin/xmlrgn -D $DLXSROOT/idx/w/workshopfa/workshopfa.dd
$DLXSROOT/misc/sgml/xml.dcl
$DLXSROOT/prep/w/workshopfa/workshopfa.concat.ead.dcl
$DLXSROOT/obj/w/workshopfa/workshopfa.xml
cp $DLXSROOT/idx/w/workshopfa/workshopfa.dd
$DLXSROOT/idx/w/workshopfa/workshopfa.prepost.dd
</pre>

After running this step, if you wish, you can see the indexed regions by issuing the following commands:

<pre>
xpatu $DLXSROOT/idx/w/workshopfa/workshopfa.dd
>> {ddinfo regionnames}
>> quit
</pre>

You can also test the XPAT queries in your workshopfa.extra.srch file. See [[Testing Fabricated Regions]].

====<span id="indexing_step3">'''Step 3: Configuring fabricated regions'''</span>====

Fabricated regions are set up in the $DLXSROOT/prep/c/collection/collection.extra.srch file. The sample file $DLXSROOT/prep/s/samplefa/samplefa.extra.srch was designed for use with the Bentley's encoding practices. If your encoding practices differ from the Bentley's, or if your collection does not have all the elements that the samplefa.extra.srch XPAT queries expect, you will need to edit your *.extra.srch file.

We recommend a combination of the following:

# Iterative work to ensure that make post does not report errors
# Up-front analysis
# Iterative work to ensure that searching and rendering work properly with your encoding practices

Depending on your knowledge of your encoding practices, you may prefer to do the up-front analysis before running "make post". On the other hand, running "make post" will give you immediate feedback if there are any obvious errors.

| + | |||

| + | =====<span id="fabregions_post">Run the "make post" and iterate until there are no errors reported.</span>===== | ||

| + | |||

| + | Run the '''"make post"''' step and look at the errors reported. Then modify *.extra.srch and rerun "make post". Repeat this until '''"make post"''' does not report any errors. See [[Mounting_Finding_Aids:_Release_14/Workshop_working_copy#Step_4:_Indexing_fabricated_regions|Step 4 Indexing fabricated regions]] below for information on running "make post." | ||

| + | |||

| + | The most common cause of "make post" errors related to fabricated regions result from a fabricated region being defined which includes an element which is not in your collection. | ||

| + | |||

| + | For example if you do not have any <famname> elements in any of the EADs in your collection and you are using the out-of-the-box samplefa.extra.srch, you will see an error message similare to the one below when xpat tries to index the mainauthor region using this rule below. | ||

| + | |||

| + | "No information for region "foo" | ||

| + | Error found: | ||

| + | <Error>No information for region famname in the data dictionary.</Error> | ||

| + | Error found: | ||

| + | |||

| + | <pre> | ||

| + | ( | ||

| + | (region "persname" + region "corpname" + region "famname" + region "name") | ||

| + | within | ||

| + | (region "origination" within | ||

| + | ( region "did" within | ||

| + | (region "archdesc") | ||

| + | ) | ||

| + | ) | ||

| + | ); | ||

| + | {exportfile /l1/workshop/user11/dlxs/idx/s/samplefa/mainauthor.rgn"}; export;~sync "mainauthor"; | ||

| + | </pre> | ||

| + | |||

| + | So you could edit the rule to eliminate the "famname" element: | ||

<pre>
(
  (region "persname" + region "corpname" + region "name")
  within
  (region "origination" within
    (region "did" within
      (region "archdesc")
    )
  )
);
{exportfile "/l1/workshop/user11/dlxs/idx/s/samplefa/mainauthor.rgn"}; export; ~sync "mainauthor";
</pre>


See [[Mounting_Finding_Aids:_Release_14/Workshop_working_copy#Common_common_causes_of_error_messages_and_solutions_2|Indexing Fabricated Regions: Common causes of error messages and solutions]] for other examples of "make post" error messages and their solutions.
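If you iterate on "make post" many times, it can help to pull the error messages out of its output mechanically. The following Python sketch is one way to do that; it is not part of DLXS. It assumes that xpat's messages appear as <Error>...</Error> elements, as in the examples above, and it takes as a (hypothetical) argument the directory where you normally run "make post", since that depends on your installation. It lists every error and, separately, the region names from any "No information for region" messages, which are the candidates to remove from your *.extra.srch file.

```python
"""Summarize xpat errors from a "make post" run (sketch, not a DLXS tool)."""
import re
import subprocess
import sys

# Assumption: xpat wraps each message in <Error>...</Error>, as in the examples above.
ERROR_RE = re.compile(r"<Error>(.*?)</Error>", re.DOTALL)
# Matches the "missing region" message and captures the region name.
MISSING_RE = re.compile(r'No information for region "?([^"\s]+)"? in the data dictionary')


def parse_errors(output: str) -> tuple[list[str], list[str]]:
    """Return (all error messages, sorted names of regions missing from the index)."""
    errors = [msg.strip() for msg in ERROR_RE.findall(output)]
    missing = set()
    for msg in errors:
        match = MISSING_RE.search(msg)
        if match:
            missing.add(match.group(1))
    return errors, sorted(missing)


def run_make_post(workdir: str) -> str:
    """Run "make post" in workdir and return stdout and stderr combined."""
    result = subprocess.run(
        ["make", "post"], cwd=workdir, capture_output=True, text=True
    )
    return result.stdout + result.stderr


if __name__ == "__main__" and len(sys.argv) == 2:
    errors, missing = parse_errors(run_make_post(sys.argv[1]))
    for msg in errors:
        print("error:", msg)
    for name in missing:
        print("region not in collection:", name)
    # A nonzero exit status makes the script easy to use in a shell loop.
    sys.exit(1 if errors else 0)
```

Remember that a reported syntax error may disappear once the missing-region errors before it are fixed, so fix the regions first and rerun.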


=====<span id="fabregions_analysis">Analysis of your collection</span>=====
You may be able to analyze your collection before running "make post" and decide in advance what changes to make to the fabricated regions. If your analysis misses anything, the other two techniques will catch it.


* Once you have run "make xml", but before you run "make post", start xpatu against the newly created index:

<pre>
xpatu $DLXSROOT/idx/w/workshopfa/workshopfa.dd
</pre>

Then run the command:

<pre>
>> {ddinfo regionnames}
</pre>

This lists all of the XML elements and attributes that were indexed as regions.


Alternatively, you can create a file called xpatregions containing the following text:

<pre>
{ddinfo regionnames}
</pre>

Then run this command:

<pre>
xpatu $DLXSROOT/idx/w/workshopfa/workshopfa.dd < xpatregions > regions.out
</pre>

The "regions.out" file you just created lists the regions that actually occur in your finding aids. Sort and examine it, and compare it with the regions used by the fabricated region queries in your copy of samplefa.extra.srch (which you copied to workshopfa.extra.srch or collection_name.extra.srch).
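The comparison can also be scripted. The Python sketch below is a hedged example, not part of DLXS. It assumes you have saved the xpatu output as regions.out as described above; because the exact layout of the {ddinfo regionnames} output is not shown here, it simply treats every name-like token in that file as an available region. It then reports each region referenced in your *.extra.srch file that is neither in regions.out nor fabricated by a ~sync elsewhere in the same file. Those are the references most likely to trigger "No information for region" errors.

```python
"""Find regions used in *.extra.srch that are missing from regions.out (sketch)."""
import re
import sys

# A region reference in a query, e.g. region "persname" or region ead
REGION_REF = re.compile(r'\bregion\s+"?([A-Za-z_][\w.:-]*)"?')
# A region the file fabricates itself, e.g. ~sync "mainauthor"
SYNC_DEF = re.compile(r'~sync\s+"([^"]+)"')
# Assumption: any name-like token in regions.out may be a region name
TOKEN = re.compile(r"[A-Za-z_][\w.:-]*")


def missing_regions(srch_text: str, regions_out_text: str) -> list[str]:
    """Return sorted region names referenced but neither indexed nor fabricated."""
    referenced = set(REGION_REF.findall(srch_text))
    fabricated = set(SYNC_DEF.findall(srch_text))
    available = set(TOKEN.findall(regions_out_text))
    return sorted(referenced - fabricated - available)


if __name__ == "__main__" and len(sys.argv) == 3:
    with open(sys.argv[1], encoding="utf-8") as f:
        srch = f.read()
    with open(sys.argv[2], encoding="utf-8") as f:
        regions = f.read()
    for name in missing_regions(srch, regions):
        print(name)
```

For example, you might run it as python check_regions.py workshopfa.extra.srch regions.out (the script name is hypothetical). Because the token match is deliberately loose, an empty result is a good sign but not a guarantee; "make post" remains the final check.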



=====<span id="fabregions_ui">Exercise the web user interface</span>=====
<div class="tip">It is best to use the other two techniques until "make post" does not report any errors. At that point, look for other problems with searching and display that may be caused by differences between your encoding practices and those of the Bentley. (The fabricated region definitions in samplefa.extra.srch are based on the Bentley's encoding practices.)</div>


Once "make post" reports no errors, follow the rest of the steps to put your collection on the web. Then carefully exercise the web user interface, looking for the following symptoms:
* Searches that don't work properly because they depend on fabricated regions that don't match your encoding practices.
* Rendering that does not work properly. For example, the name/title of the finding aid may not show up if your <unititle> element precedes your <origination> element in the top-level <did>. See also [[Troubleshooting Finding Aids#Title of finding aid does not show up|Title of finding aid does not show up]].

For more information on the regions used for searching and rendering, see:
* [[Working with Fabricated Regions in Findaid Class]]


====<span id="indexing_step4">'''Step 4: Indexing fabricated regions'''</span>====
Index the fabricated regions specified in your workshopfa.extra.srch that occur in the file of concatenated EADs with the following command:

<pre>
make post
</pre>


''The makefile runs the following commands:''
<pre>
cp /l1/workshop/test02/dlxs/prep/w/workshopfa/workshopfa.prepost.dd
/l1/workshop/test02/dlxs/idx/w/workshopfa/workshopfa.dd
...
/l1/workshop/test02/dlxs/prep/w/workshopfa/workshopfa.extra.dd
/l1/workshop/test02/dlxs/idx/w/workshopfa/workshopfa.dd
</pre>


=====Common common causes of error messages and solutions=====
;''"invalid endpoints"''
:If you get an ''"invalid endpoints"'' message from "make post", the most likely cause is XML processing instructions or some other corruption in the data. The second "make validate" step should have caught these.


;''"No information for region foo"''
<pre>
Error found:
<Error>No information for region famname in the data dictionary.</Error>
Error found:
<Error>syntax error before: ")</Error>
</pre>
:This is usually caused by a region that is listed in the *.extra.srch file but does not occur anywhere in your collection. For example, if none of the EADs in your collection contain a <famname> element and you are using the out-of-the-box samplefa.extra.srch, you will see the above error message when xpat tries to index the mainauthor region using this rule:
<pre>
((region "persname" + region "corpname" + region "famname" + region "name") within (region "origination" within
( region "did" within (region "archdesc")))); {exportfile "$DLXSROOT/idx/s/samplefa/mainauthor.rgn"}; export;
~sync "mainauthor";
</pre>
The easiest solution is to modify your *.extra.srch file to match the characteristics of your collection.

<div class="tip">Tip: If you have only a small sample of the EADs you will be mounting, and you expect that EADs you receive later may contain elements that are currently missing from your collection, an alternative is to add a "dummy" EAD to your collection. The "dummy" EAD should contain every element you expect to use (or that the *.extra.srch file requires), and every element except <eadid> should be empty.</div>


; Syntax error
<pre>
Error found:
<Error>syntax error before: ")</Error>
</pre>
:Sometimes xpat reports a syntax error when in fact some other error occurred just before the point where it thinks the syntax error is. For example, in the error message for the missing "famname" region above, xpat reports a syntax error in addition to the "No information for region famname" error. In this case, once you fix the famname error, the false syntax error will go away. However, if xpat reports only syntax errors and no other errors, there is probably a real syntax error. Always start with the first syntax error reported. In many cases the cause is unbalanced parentheses. The easiest way to troubleshoot syntax errors is to start an xpatu session and paste the xpat queries from your *.extra.srch file onto the command line one at a time. (See example hereXXX).


<div class="tip"> Warning! If "make post" produces errors, you need to fix them. Otherwise, searching and display of your finding aids may produce inconsistent results, and the CGI script may crash.</div>
See also [[Working with Fabricated Regions in Findaid Class]]
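Unbalanced parentheses, the usual cause of a real syntax error, can also be spotted before you start pasting queries into xpatu. The following Python sketch is a hypothetical helper, not a DLXS tool. It scans a *.extra.srch file and reports the position of each unmatched parenthesis, skipping parentheses inside double-quoted strings. It assumes that no quoted string spans more than one line, so it also flags any line with an odd number of quotes, such as an {exportfile ...} path that is missing its opening quote.

```python
"""Report unbalanced parentheses and quotes in an *.extra.srch file (sketch)."""
import sys


def check_parens(text: str) -> list[str]:
    """Return human-readable descriptions of every balance problem found."""
    problems = []
    stack = []  # (line, column) of every "(" not yet closed
    for lineno, line in enumerate(text.splitlines(), start=1):
        in_quote = False  # assumption: quoted strings never span lines
        for col, ch in enumerate(line, start=1):
            if ch == '"':
                in_quote = not in_quote
            elif in_quote:
                continue  # ignore parentheses inside region names and paths
            elif ch == "(":
                stack.append((lineno, col))
            elif ch == ")":
                if stack:
                    stack.pop()
                else:
                    problems.append(f"line {lineno}, col {col}: unmatched ')'")
        if in_quote:
            problems.append(f"line {lineno}: unterminated string")
    # Anything left on the stack was opened but never closed.
    for lineno, col in stack:
        problems.append(f"line {lineno}, col {col}: unclosed '('")
    return problems


if __name__ == "__main__" and len(sys.argv) == 2:
    with open(sys.argv[1], encoding="utf-8") as f:
        for problem in check_parens(f.read()):
            print(problem)
```

The stack carries open parentheses across lines, so multi-line rules like the indented mainauthor example are checked as a whole.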

----

===Testing the index===
At this point, it is a good idea to test the newly created index. Test it from a directory other than the one you indexed in, to make sure that relative and absolute paths resolve correctly. Invoke xpat with the following command:
<pre>
xpatu $DLXSROOT/idx/w/workshopfa/workshopfa.dd
</pre>
For more information about searching, see the XPAT manual.

Try searching for some likely regions. It's a good idea to test some of the fabricated regions in particular. Here are a few sample queries:

<pre>
>> region "ead"
...
>> region "admininfo"
5: 3 matches
</pre>
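To repeat these checks after each re-index, you can feed the queries to xpatu on standard input, the same way the xpatregions file is used above. In the Python sketch below, the default query list and the result-line pattern are assumptions: the pattern is modeled on the "5: 3 matches" line in the sample session and may need adjusting for your xpatu version. A query that reports zero matches for a region you expect to be present, particularly a fabricated one, is worth investigating.

```python
"""Run a batch of test queries against an index with xpatu (sketch)."""
import re
import subprocess
import sys

# Assumption: each query result includes a line like "5: 3 matches", as in the
# sample session above (set number, colon, match count).
RESULT_RE = re.compile(r"(\d+):\s+(\d+)\s+match(?:es)?\b")

# Hypothetical default queries; adjust them to the regions in your collection.
DEFAULT_QUERIES = ['region "ead"', 'region "main"', 'region "names"']


def run_queries(dd_path: str, queries: list[str]) -> str:
    """Feed the queries to xpatu on standard input and return its output."""
    script = "\n".join(queries) + "\n"
    result = subprocess.run(
        ["xpatu", dd_path], input=script, capture_output=True, text=True
    )
    return result.stdout


def match_counts(output: str) -> list[int]:
    """Extract the match count from every result line in xpatu output."""
    return [int(count) for _set_number, count in RESULT_RE.findall(output)]


if __name__ == "__main__" and len(sys.argv) >= 2:
    queries = sys.argv[2:] or DEFAULT_QUERIES
    counts = match_counts(run_queries(sys.argv[1], queries))
    if len(counts) != len(queries):
        print("warning: result count differs from query count; check xpatu output")
    for query, count in zip(queries, counts):
        flag = "  <-- no matches" if count == 0 else ""
        print(f"{query}: {count}{flag}")
```

Run it from a directory other than the one you indexed in, for the reason given above.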


[[#top|Top]]


==Working with Fabricated Regions in Findaid Class==

===Fabricated Regions Overview===

When you run "make xml", DLXS uses XPAT in combination with xmlrgn and a DTD. This process indexes the elements and attributes in the DTD as "regions": containers of content, rather like fields in a database. These regions are built into the regions file (collid.rgn) and are identified in the data dictionary (collid.dd).


However, sometimes the things you want to identify collectively are not conveniently identified as elements in the DTD. For example, the Findaid Class search interface can let the user search in a Names region. For your collection, you may want Names to include name, persname, corpname, geogname, and famname. By creating an XPAT query that ORs these regions together, you can have XPAT index all the regions that satisfy the OR-ed query. For example:

<pre>
(region "name" + region "persname" + region "corpname" + region "geogname" + region "famname")
</pre>


Once you have a query that produces the results you want, you can add an entry to the *.extra.srch file that, when you run the "make post" command, will run the query, create a file for export, export it, and sync it:

<pre>
{exportfile "$DLXSROOT/idx/c/collid/names.rgn"}; export; ~sync "names";
</pre>
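When several fabricated regions follow this pattern, you can generate the entries rather than typing them. The Python sketch below is illustrative only: the function name is hypothetical, and it assumes the $DLXSROOT/idx/<first letter>/<collid>/ index layout used in the examples on this page. If you pass the set of regions that actually occur in your collection (for instance, from the regions.out file described earlier), it leaves out missing elements, which avoids the "No information for region" error.

```python
"""Generate a *.extra.srch entry for a fabricated region (sketch)."""
from typing import Optional


def fabricated_region_entry(
    name: str,
    candidates: list[str],
    collid: str,
    available: Optional[set[str]] = None,
) -> str:
    """OR the candidate regions together and export the result as region `name`.

    If `available` is given, candidates that do not occur in the collection
    are dropped, since xpat cannot index a region missing from the data dictionary.
    """
    if available is not None:
        candidates = [c for c in candidates if c in available]
    if not candidates:
        raise ValueError(f"no candidate regions left for {name!r}")
    query = " + ".join(f'region "{c}"' for c in candidates)
    # Assumed layout: $DLXSROOT/idx/<first letter of collid>/<collid>/<name>.rgn
    path = f"$DLXSROOT/idx/{collid[0]}/{collid}/{name}.rgn"
    return f'({query}); {{exportfile "{path}"}}; export; ~sync "{name}";'


if __name__ == "__main__":
    # Example: a Names region for a collection that has no <famname> elements.
    print(fabricated_region_entry(
        "names",
        ["name", "persname", "corpname", "geogname", "famname"],
        "workshopfa",
        available={"name", "persname", "corpname", "geogname"},
    ))
```

Raising an error when no candidates remain is deliberate: an empty OR would itself be an xpat syntax error.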


===Why Fabricate Regions?===

Why fabricate regions? Why not just put these queries in the map file and call them names? While you could, it is probably worth your time to build these succinctly named, precompiled regions: query errors are more easily identified during index building than in the CGI, and XPAT searches for terms within prebuilt regions can be simpler and quicker.

The middleware for Findaid Class uses a number of fabricated regions to speed up xpat queries and to simplify coding and configuration.


Findaid Class uses fabricated regions for several purposes:
# To share code with Text Class (e.g. region "main")
# For searching (e.g. region "names")
# To produce the Table of Contents and to display EAD sections as focused regions, such as the "Title Page" or "Arrangement" (see [[Customizing Findaid Class#Working_with_the_table_of_contents |Working with the table of contents]] for more information on the use of fabricated regions for the table of contents)
# For use by specific processing instructions (PIs); for example, region "maintitle" is used by the PI <?ITEM_TITLE_XML?>, which displays the title of a finding aid at the top of each page

The fabricated region "main" is set to refer to <span class="unixcommand"><ead></span> in FindaidClass with:
<pre>
(region ead); {exportfile "/l1/idx/b/bhlead/main.rgn"}; export; ~sync "main";
</pre>
whereas in TextClass "main" can refer to <span class="unixcommand"><TEXT></span>. Therefore, both FindaidClass and TextClass can share the Perl code, in a higher-level subclass, that creates searches for "main".